PEW

Project Overview

In Spring 2024, I participated in a neurotech hackathon and worked with four other teammates to create a VR application that uses EEG/EMG signals. Our goals were clear but ambitious:

1. Build a functional Unity application designed with VR in mind, considering factors such as motion sickness and control modes.

2. Calibrate EMG signals and incorporate them into the application's weapon-switch feature.

3. Build and deploy the application to the Meta Quest 2!
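
Goal 2 depends on turning a raw EMG stream into a reliable "flex" signal. Below is a minimal sketch of one common calibration approach, assuming the player records a short rest period and a short flex period: compute the root-mean-square (RMS) amplitude over fixed windows, then place the threshold between the two levels. The window size, the midpoint rule, and the function names are illustrative assumptions, not necessarily the calibration our team used.

```python
import math
from statistics import mean


def window_rms(samples, window=50):
    """Split a 1-D EMG recording into non-overlapping windows and return each window's RMS.

    A trailing partial window is dropped.
    """
    return [
        math.sqrt(sum(x * x for x in samples[i:i + window]) / window)
        for i in range(0, len(samples) - window + 1, window)
    ]


def calibrate_threshold(rest_samples, flex_samples, window=50):
    """Place the flex threshold halfway between the mean rest RMS and the mean flex RMS.

    The midpoint rule is an assumption; rest mean plus a few standard deviations
    is another common choice.
    """
    rest_level = mean(window_rms(rest_samples, window))
    flex_level = mean(window_rms(flex_samples, window))
    if flex_level <= rest_level:
        raise ValueError("flex recording is not stronger than rest; recalibrate")
    return (rest_level + flex_level) / 2


def is_flex(window_samples, threshold):
    """Return True if one window of live EMG exceeds the calibrated threshold."""
    rms = math.sqrt(sum(x * x for x in window_samples) / len(window_samples))
    return rms > threshold
```

With a synthetic rest signal of amplitude 0.1 and a flex signal of amplitude 1.0, the threshold lands near 0.55, so any live window whose RMS rises above that counts as a flex.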

Our project was big because we wanted to explore all of our team's interests. With members studying EE, Math, CS, and Chemistry, we decided to use every tool the hackathon provided. Most of us were new to these tools, but we had a lot of fun learning and helping each other. We won second place!

The Process

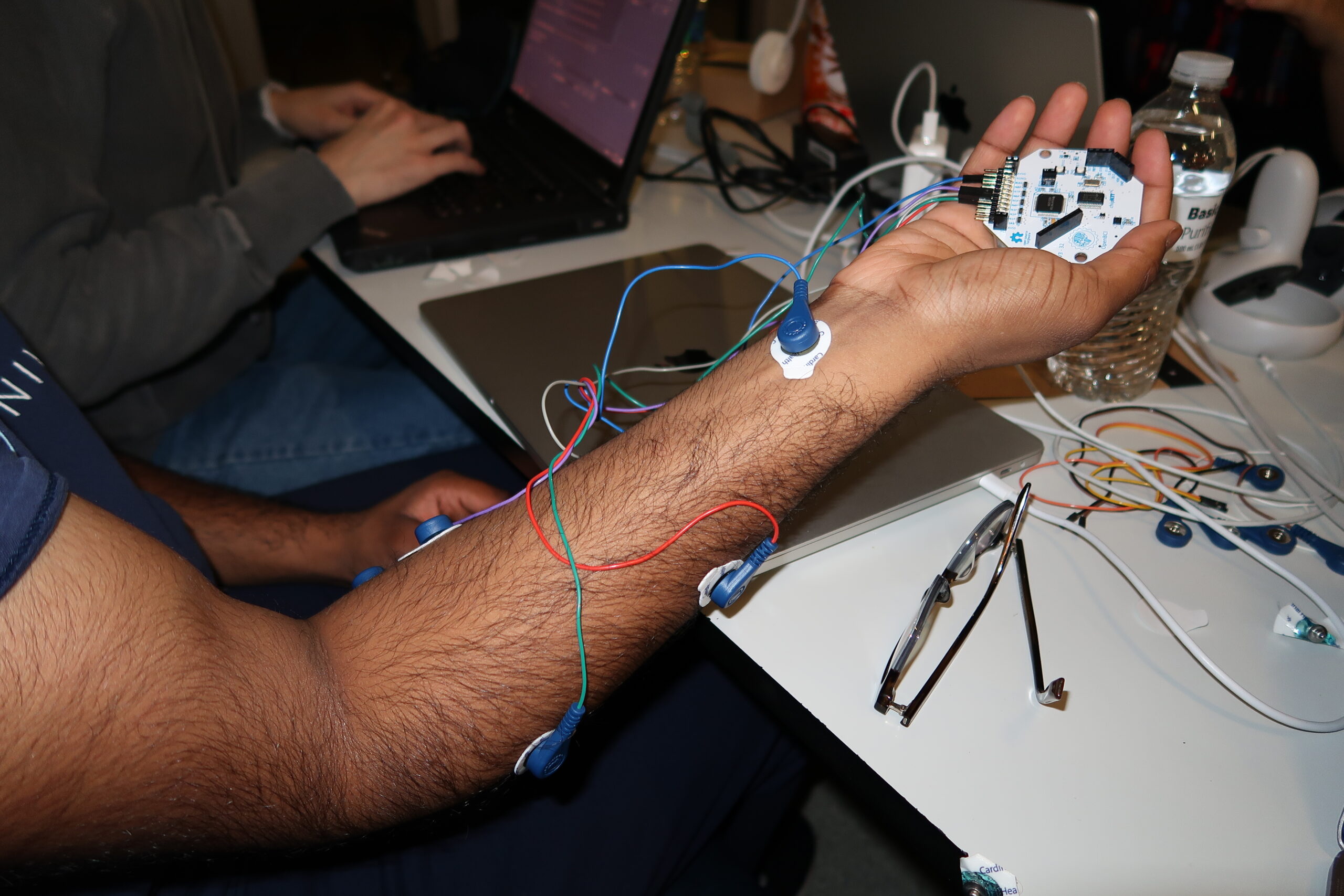

Our team split into two main groups: one developing in Unity and one working with the OpenBCI tools to calibrate EMG/EEG signals. I was on the development subteam with two other members. Because we only had a weekend to put the project together, the game mechanics are basic; we prioritized the features we agreed were most important for the user.

We were given a foundational scene and 3D models for our GameObjects in Unity, so we focused on implementing the game logic and mechanics.

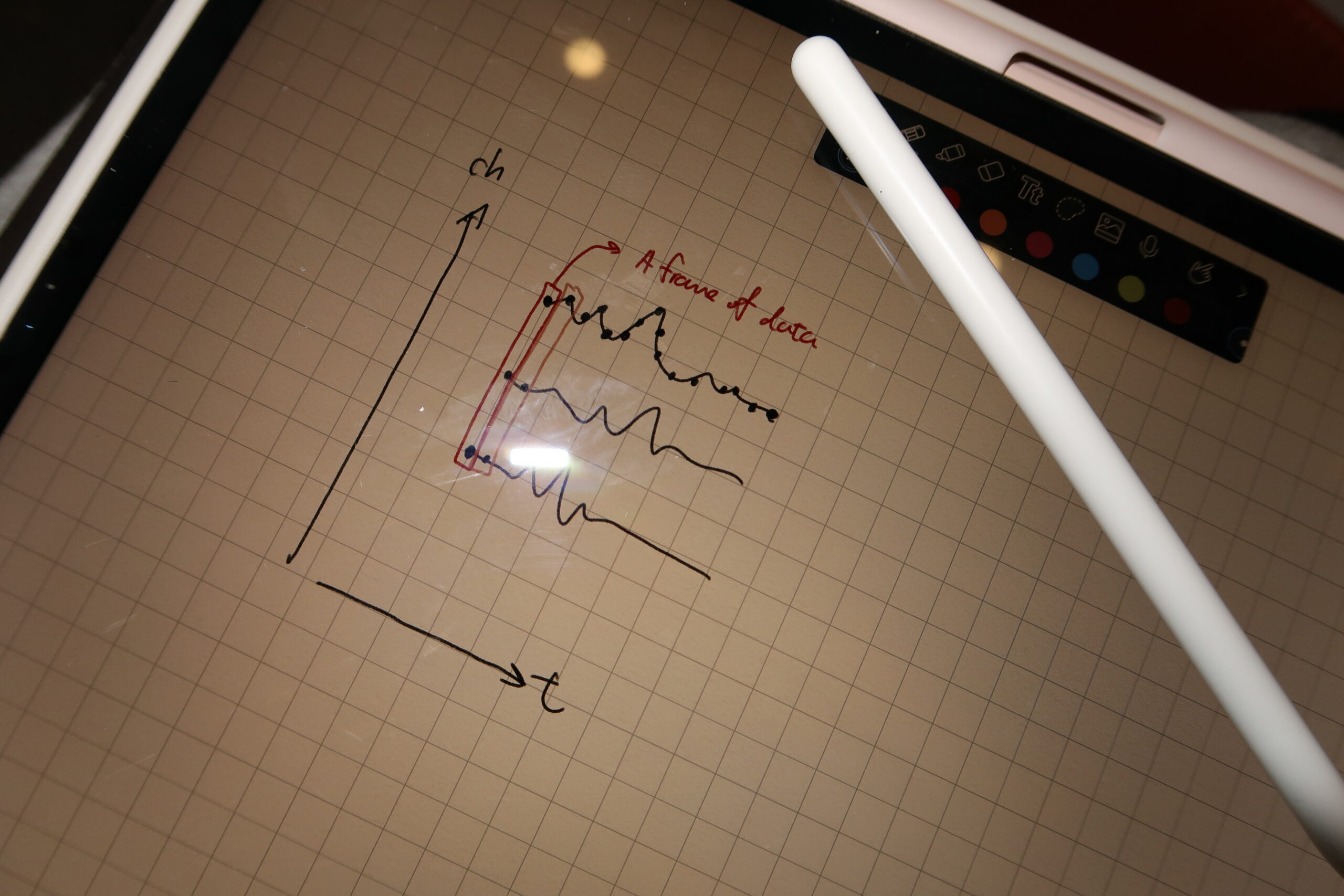

The first hurdle was finding a way to reliably stream raw BCI and EMG signals to our own computers by calibrating the provided software and hardware.

We wanted to use the recommended Lab Streaming Layer (LSL) to integrate g.tec's Unicorn BCI into our Unity project, but we had issues sending LSL messages to Unity. So we tried establishing a TCP connection instead, which worked perfectly. Ultimately, we switched to UDP for its faster, lower-overhead delivery: it sends each message without a connection handshake, acknowledgments, or retransmissions. We also had trouble decoding EEG signals because the data was noisy.
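
The move from TCP to UDP comes down to how each protocol delivers data. UDP sends every reading as an independent datagram, so a late or dropped packet never stalls the ones behind it, which suits a live stream where only the newest sample matters. Below is a minimal loopback sketch using Python's standard `socket` module. The port number, the function names, and the plain-text `"EMG:flex"` payload are hypothetical, and in PEW the receiving end was a C# script inside Unity rather than Python.

```python
import socket

HOST = "127.0.0.1"  # loopback; a real setup would use the Unity machine's address
PORT = 5005         # hypothetical default port, not taken from the PEW project


def open_receiver(host=HOST, port=PORT, timeout=1.0):
    """Bind a UDP socket that waits for datagrams (stands in for the Unity-side server)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind((host, port))
    sock.settimeout(timeout)  # avoid blocking forever if a datagram is lost
    return sock


def send_reading(message, host=HOST, port=PORT):
    """Send one datagram to the receiver; UDP needs no connection or handshake first."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message.encode("utf-8"), (host, port))


if __name__ == "__main__":
    receiver = open_receiver(port=0)  # port 0 lets the OS pick a free port for this demo
    try:
        demo_port = receiver.getsockname()[1]
        send_reading("EMG:flex", port=demo_port)
        data, _addr = receiver.recvfrom(1024)  # one recvfrom call returns one whole datagram
        print(data.decode("utf-8"))
    finally:
        receiver.close()
```

Unlike TCP, there is no `connect`/`accept` step and no ordering guarantee: each `sendto` is delivered whole or not at all, which is the trade-off accepted in exchange for lower latency.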

The Product

Although the app is fully functional on a laptop, PEW connects to the Meta Quest 2 to provide an immersive VR game experience. Users can play hands-free: BCI and EMG signals are recorded live and sent to Unity as input over a UDP connection. BCI signals let users kill robot targets without moving by "focusing" on one robot (accelerometer signals can be used instead if real-time EEG signals are too noisy to decode), and EMG signals let them seamlessly switch between three weapons, each with a different attack.
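
A seamless weapon switch needs one more piece of logic: a sustained flex should advance the weapon once, not on every incoming sample. Below is a minimal sketch of one way to do that, assuming each reading already carries a boolean "flex detected" flag. It switches only on the rising edge (rest to flex) and ignores new flexes during a short cooldown. The weapon names, the 0.5 s cooldown, and the `WeaponSwitcher` class are hypothetical. Python is used here only for illustration; this logic could equally live in the Python client or in Unity's C# scripts.

```python
WEAPONS = ["blaster", "shotgun", "laser"]  # hypothetical names for the three weapons


class WeaponSwitcher:
    """Cycle through weapons on the rising edge of an EMG flex, with a cooldown."""

    def __init__(self, weapons=WEAPONS, cooldown_s=0.5):
        self.weapons = list(weapons)
        self.index = 0
        self.cooldown_s = cooldown_s
        self.was_flexing = False
        self.last_switch_t = float("-inf")  # so the very first flex always switches

    @property
    def current(self):
        return self.weapons[self.index]

    def update(self, flexing, t):
        """Feed one flex/no-flex reading taken at time t (seconds); return the active weapon."""
        rising_edge = flexing and not self.was_flexing
        if rising_edge and t - self.last_switch_t >= self.cooldown_s:
            self.index = (self.index + 1) % len(self.weapons)  # wrap back to the first weapon
            self.last_switch_t = t
        self.was_flexing = flexing
        return self.current
```

Usage: starting on `"blaster"`, a flex at t = 0.0 s switches to `"shotgun"`; holding that flex at 0.1 s changes nothing; releasing and flexing again at 0.3 s is ignored because it falls inside the cooldown; a fresh flex at 0.7 s advances to `"laser"`. Edge detection keeps one long contraction from cycling through every weapon, and the cooldown absorbs the brief dips and spikes typical of a noisy EMG envelope.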

PEW feeds data into Unity over UDP. On the client side (the player), a Python script within PhysioLabXR preprocesses the BCI/EMG signals and sends them as UDP messages. The server side was implemented in C#, since it is Unity's scripting language. Details about our VR integration are in our GitHub README.

The rest of our project focused on implementing game features, including a UI that displays the current scoreboard and a countdown timer. We also enhanced the UX by adding sound effects to every player action and by making object deaths more dramatic through death-particle emission and ammunition.
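
Because the Python client in PhysioLabXR and the C# server in Unity are written in different languages, both sides have to agree on a message format. Below is a minimal sketch of one option, a compact JSON payload. The field names (`signal`, `event`, `t`), the signal tags, and the function names are hypothetical, not the format in our repository. On the Unity side, a payload like this could be parsed with a JSON library such as Unity's `JsonUtility`.

```python
import json
import time

VALID_SIGNALS = {"EEG", "EMG", "ACC"}  # hypothetical tags: brain, muscle, accelerometer


def encode_event(signal, event, t=None):
    """Pack one preprocessed reading into compact UTF-8 JSON bytes for a UDP datagram."""
    if signal not in VALID_SIGNALS:
        raise ValueError(f"unknown signal type: {signal!r}")
    payload = {"signal": signal, "event": event, "t": time.time() if t is None else t}
    return json.dumps(payload, separators=(",", ":")).encode("utf-8")


def decode_event(datagram):
    """Inverse of encode_event; mirrors what the Unity-side server would need to parse."""
    payload = json.loads(datagram.decode("utf-8"))
    if not isinstance(payload, dict):
        raise ValueError("payload must be a JSON object")
    missing = {"signal", "event", "t"} - set(payload)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    return payload
```

For example, `encode_event("EMG", "flex", t=12.5)` produces `b'{"signal":"EMG","event":"flex","t":12.5}'`, small enough to fit comfortably in a single datagram. Including a timestamp lets the receiver discard readings that arrive too late to matter, which UDP itself does not do.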